Driver Activity Recognition to advance context awareness of on-the-market ADAS systems

Authors:

Elvio Amparore (1), Marco Botta (1), Susanna Donatelli (1), Idilio Drago (1), Maria Jokela (2)

Institutions:

(1) University of Torino, Computer Science Department, Italy

(2) VTT Technical Research Centre, Finland

Current on-the-market Advanced Driving Assistance Systems (ADAS) work mainly considering the road environment, but not the driver’s attention. For instance, a Lane Keeping Assistance (LKA) system applies steering torque towards the center of the lane when the car diverges from the current lane. Typically, this divergence condition is not enough to discern if this is a risky situation or if it is an explicit intention of the driver, resulting in a system that raises several false positives.

There are two undesired consequences of false positives: 1) an unnecessary torque or alert sound happening while the driver is diligently performing a maneuver may actually distract the driver, and 2) if too many torques from LKA are perceived by the driver as unnecessary, they may decide to disable the LKA. Other ADAS may take advantage of knowing the driver’s attention state too, like emergency braking – as for LKA, the emergency brake alert on an attentive driver may result in risky situations.

At the University of Torino, we have developed in the NextPerception project a novel AI system for Driver Activity Recognition (DAR) that accurately detects and recognizes secondary actions associated with distracted driving, such as texting, eating or using electronic devices. The solution, named NextPDAR, combines several advanced techniques to limit the amount of data needed for its training. The NextPDAR system is meant to introduce context-awareness in ADAS, so that its human-machine interface can modulate the automatic system intervention according to the driver’s attention level.

In the NextPDAR system, a frontal RGB camera (or an Infrared camera to operate in both day and night conditions) monitors the driver state to detect if they are performing secondary actions. Building a precise DAR based on cameras is a computer vision problem, which requires a large amount of data for its development. This is particularly critical when considering the data-hungry deep neural networks. To have a system that generalizes to multiple subjects, situations and activities, many (potentially sensitive) data samples need to be collected. Such data can only be collected from participants that are fully aware of its usage, to comply with current regulations.

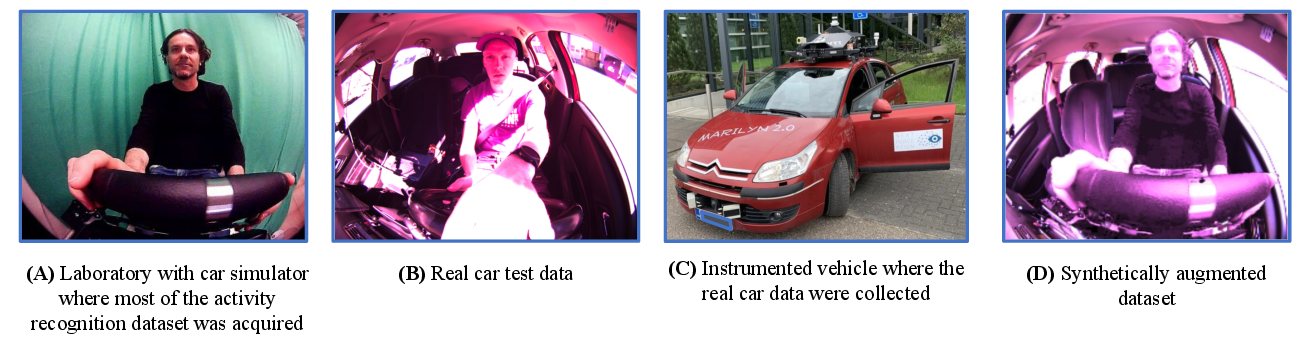

Therefore, to reduce the amount of data and increase the generalization of the AI system, at the University of Torino we have devised a transfer learning strategy that is combined with advanced data augmentation techniques. Data has been mostly collected in the laboratory (A). A much smaller amount of data has been taken in a real-life setting – images like (B), taken from the interior of VTT’s instrumented car (C). Computer graphics techniques have been applied to replicate the car’s internal environment on the data collected in the lab, resulting in images like (D). In this way, many distracting actions have been recorded in a safe laboratory setting, reducing the experimental cost while producing data that replicates in-car conditions.

Data transformation pipeline to achieve dataset transfer from lab to car conditions.

The NextPDAR system uses a deep learning architecture based on transformers, fine-tuned from the state-of-the-art SwinTransformerV2 architecture [1]. NextPDAR achieves excellent scalability and adaptability. We fine-tuned the AI component using the dataset produced in the laboratory, testing the performance of the obtained model with data from real subjects collected in VTT’s instrumented car. The system, trained on 34 laboratory subjects and just 1 car driver, achieves high accuracy when running on not-seen-before subjects driving in the real car (accuracy of 91.2%) and performing both attentive driving as well as distracting activities.

NextPDAR module in the VTT car, trained using transformed laboratory data.

An important technological aspect of modern AI is that it is intrinsically uncertain and prone to non-negligible residual errors. It is not unusual that highly accurate AI systems based on deep learning components for computer vision tasks still have error rates above 10% or even more. For example, the current best AI model (BASIC-L, 2023) for the most famous benchmark in image classification (ImageNet) achieves [2] accuracy of 91.1%.

Such accuracy levels are not enough for safety-critical systems. We therefore improved NextPDAR to compute the classification confidence of the AI system and decide whether to trust or not on the classification.

By discerning and discarding low-confidence classifications, the NextPDAR AI module developed by the University of Torino for the NextPerception improves from 91.2% to 95.4% accuracy, removing several incorrectly classified conditions and allowing the system to be more predictable and useful.

[1] The Swin Transformer V2 model was proposed in Swin Transformer V2: Scaling Up Capacity and Resolution by Ze Liu, Han Hu, Yutong Lin, Zhuliang Yao, Zhenda Xie, Yixuan Wei, Jia Ning, Yue Cao, Zheng Zhang, Li Dong, Furu Wei, Baining Guo.

[2] https://paperswithcode.com/sota/image-classification-on-imagenet